A computer is a general-purpose machine that processes data according to a specific set of instructions, using circuits built from a series of logic gates (AND, OR, NOT, etc.). The instructions the computer uses are either stored permanently (in read-only memory, or 'ROM') or temporarily (in random-access memory, or 'RAM').

The basic components are simple:

Abstractly, the essential elements of a computer comprise:

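Almost all personal computers, workstations, minicomputers, and mainframes are based on the von Neumann design principle of stored programs: instructions are held in memory alongside the data they operate on, so the same hardware can run any program simply by loading different instructions. This is what made computers general-purpose problem-solving machines.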

Von Neumann's architecture describes a computer with four main sections: the Arithmetic and Logic Unit (ALU), the control circuitry, the memory, and the input and output devices (collectively termed I/O). These parts are interconnected by a bundle of wires, a "bus."
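To make the four sections concrete, here is a minimal sketch of a toy stored-program machine. The instruction set (`LOAD`, `ADD`, `SUB`, `AND`, `STORE`, `OUT`, `HALT`) and the function names are hypothetical, invented only for illustration. The point is that a single `memory` list holds both the program and its data, the `run` loop plays the role of the control circuitry, `alu` is the ALU, and the `output` list stands in for an output device.

```python
# A toy stored-program (von Neumann) machine.
# The instruction set is hypothetical and exists only for illustration.

def alu(op, a, b):
    """Arithmetic and Logic Unit: performs the actual computation."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "AND":
        return a & b
    raise ValueError(f"unknown ALU operation: {op}")


def run(memory):
    """Control circuitry: fetch, decode, and execute until HALT.

    `memory` is ONE list that holds both instructions and data.
    Returns the values sent to the output device (I/O).
    """
    pc = 0        # program counter: address of the next instruction
    acc = 0       # accumulator register
    output = []   # stands in for an output device
    while True:
        op, addr = memory[pc]   # fetch the instruction and decode it
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op in ("ADD", "SUB", "AND"):
            acc = alu(op, acc, memory[addr])
        elif op == "STORE":
            memory[addr] = acc
        elif op == "OUT":
            output.append(acc)
        elif op == "HALT":
            return output
        else:
            raise ValueError(f"unknown instruction: {op}")


# Program and data share the same memory: cells 0-4 are code, 5-7 are data.
memory = [
    ("LOAD", 5),    # acc <- memory[5]
    ("ADD", 6),     # acc <- acc + memory[6]
    ("STORE", 7),   # memory[7] <- acc
    ("OUT", None),  # send acc to the output device
    ("HALT", None),
    2, 3, 0,        # data
]
print(run(memory))  # prints [5]
```

Because the program is just more data in `memory`, changing what the machine does only requires writing different values into those cells, not rewiring the hardware.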

Improving performance in computers then is a matter of:

In turn, all of these improvements require the ability to move and switch electrons at increasing speeds and in increasing volumes (e.g., carrier density). The speed of the system bus is critical: if it is too slow, the CPU spends its time waiting for data, and its effective speed is limited by the bus rather than by the processor itself. You do not want to be I/O limited, yet many machines are.
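A back-of-the-envelope sketch of why a slow bus hurts, assuming (as a simplification) that the CPU cannot overlap computation with data transfer. The function name and the workload numbers are hypothetical.

```python
def cpu_utilization(ops, ops_per_sec, bytes_needed, bus_bytes_per_sec):
    """Fraction of time the CPU spends computing rather than waiting for data.

    Simplifying assumption: computation and data transfer do not overlap,
    so total time = compute time + transfer time.
    """
    compute_time = ops / ops_per_sec
    transfer_time = bytes_needed / bus_bytes_per_sec
    return compute_time / (compute_time + transfer_time)


# Hypothetical workload: 1e9 operations that need 8e9 bytes of data,
# running on a CPU that can do 1e9 operations per second.
for bus in (1e9, 8e9, 64e9):  # bus bandwidth in bytes per second
    u = cpu_utilization(1e9, 1e9, 8e9, bus)
    print(f"bus {bus / 1e9:>4.0f} GB/s -> CPU busy {u:.0%} of the time")
```

Under these assumptions, with a 1 GB/s bus the CPU is busy only about 11% of the time (1 s of compute versus 8 s of transfer), so the machine is I/O limited no matter how fast the processor is.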

Nowadays, most computers appear to execute several programs at the same time. This is usually referred to as multitasking. In reality, a single CPU executes instructions from one program, then after a short period of time switches to a second program and executes some of its instructions. This short interval is usually called a time slice. Sharing the CPU's time among the programs in this way creates the illusion that they are being executed simultaneously.
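Time slicing can be sketched as a round-robin scheduler, one common policy in which each program runs for at most one time slice and then goes to the back of the queue. Real operating systems use more elaborate policies; the program names and the idea of measuring a slice in instructions are simplifying assumptions for illustration.

```python
from collections import deque


def round_robin(programs, time_slice):
    """Simulate one CPU sharing its time among several programs.

    `programs` maps a program name to the number of instructions it still
    needs to execute. Each turn, a program runs for at most `time_slice`
    instructions, then goes to the back of the queue if it is unfinished.
    Returns the execution trace as (program, instructions_run) pairs.
    """
    queue = deque(programs.items())
    trace = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)
        trace.append((name, ran))
        remaining -= ran
        if remaining > 0:
            queue.append((name, remaining))  # not finished: wait for next turn
    return trace


trace = round_robin({"editor": 5, "browser": 3, "music": 4}, time_slice=2)
print(trace)
# [('editor', 2), ('browser', 2), ('music', 2), ('editor', 2),
#  ('browser', 1), ('music', 2), ('editor', 1)]
```

Only one program runs at any instant, but because the slices interleave, all three make steady progress, which is the illusion of simultaneity described above.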

However, as processors get faster, one has to concentrate on better operating systems (anything but WinDoze) to manage all this capability. Next-generation computers will therefore require next-generation operating systems (OSs). The big question is: will the OS be optimized for the personal computer, or will the network become the computer? The latter should occur, but market economics drive the former.

The Memory Latency Problem:

Memory latency is the time it takes for a computer's central processing unit (CPU) to retrieve a piece of information stored in random access memory (RAM).

One problem is that in conventional dynamic random access memory (DRAM) or static random access memory (SRAM), each line in a two-dimensional memory array is managed by one switch.

The farther a piece of data is from the switch or the central processing unit, the longer it takes to retrieve it. This problem is compounded by the complicated code and data structures of modern software applications, which access vast amounts of memory in unpredictable (random) order.
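A common way to expose latency, as opposed to bandwidth, is a pointer-chasing loop: memory holds a chain of addresses, and each load depends on the result of the previous one, so the processor cannot fetch ahead. The sketch below builds such a chain over a Python list standing in for memory, either in sequential order or in random order. The function names are hypothetical, and in Python the interpreter's own overhead dominates each step, so the printed timings say little about the hardware; a real latency benchmark would apply the same idea in a compiled language.

```python
import random
import time


def make_chain(n, shuffled, seed=0):
    """Return a list `nxt` where nxt[i] is the index visited after i.

    All n cells form a single cycle. If `shuffled` is True, the cycle
    visits cells in random order (like pointer-heavy data structures);
    otherwise it walks memory sequentially.
    """
    order = list(range(n))
    if shuffled:
        random.Random(seed).shuffle(order)
    nxt = [0] * n
    # Link each cell to the next one in `order`, wrapping around at the end.
    for a, b in zip(order, order[1:] + order[:1]):
        nxt[a] = b
    return nxt


def chase(nxt, steps, start=0):
    """Follow the chain: each load depends on the result of the previous one."""
    i = start
    for _ in range(steps):
        i = nxt[i]
    return i


n = 1 << 20
for shuffled in (False, True):
    nxt = make_chain(n, shuffled)
    t0 = time.perf_counter()
    chase(nxt, n)
    dt = time.perf_counter() - t0
    label = "random" if shuffled else "sequential"
    print(f"{label}: {dt / n * 1e9:.1f} ns per step")
```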

Reducing memory latency is one of several major challenges to developing the massively parallel computers of the Accelerated Strategic Computing Initiative (ASCI) beyond the next generation.

(ASCI computers are a key component of the Department of Energy's program for stewardship of our nation's stockpile of nuclear weapons. Combined with nonnuclear experiments, simulations of nuclear implosions and other phenomena in three dimensions are needed so that scientists can assure that the stockpile remains safe and secure without underground nuclear testing.)

The current ASCI White computer, the most powerful in the world, operates at 12 teraops (trillion operations per second). The next-generation ASCI computers will operate in the 30 to 70 teraops range. However, the imbalance between microprocessor speed and the delivery time of information to the processor hampers performance.
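To put the imbalance in numbers: while a processor waits on one memory access, it loses roughly latency × clock rate cycles of work. The figures below (100 ns memory latency, 1 GHz and 3 GHz clocks, one or four operations per cycle) are illustrative assumptions, not measurements of ASCI White or any other machine, and the function name is hypothetical.

```python
def ops_lost_per_access(latency_ns, clock_ghz, ops_per_cycle=1):
    """Operations a processor could have executed while stalled on one memory access.

    latency (ns) * clock rate (cycles per ns; 1 GHz = 1 cycle per ns)
    gives stalled cycles, times operations per cycle gives lost operations.
    """
    return latency_ns * clock_ghz * ops_per_cycle


# Hypothetical figures for illustration only.
print(ops_lost_per_access(latency_ns=100, clock_ghz=1.0))                   # 100.0
print(ops_lost_per_access(latency_ns=100, clock_ghz=3.0, ops_per_cycle=4))  # 1200.0
```

If the processor gets faster while memory latency stays the same, the work lost on every access grows, which is exactly the imbalance that hampers performance.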

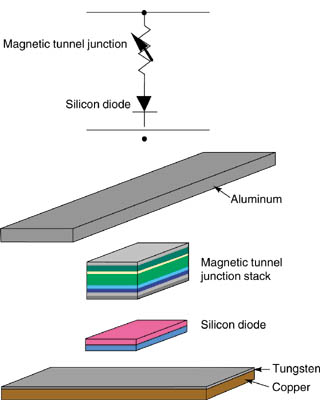

One potential solution is magnetic random access memory (MRAM). The concept is to integrate a diode switch on top of a vertical column of MRAM. Currently, memory is laid out horizontally, and this contributes to memory latency.

In addition to speeding up information access, MRAM offers several other advantages. It is immune to radiation damage, consumes little power, and continues to function over wide temperature ranges. Unlike most other forms of RAM, magnetic RAM is nonvolatile, which is to say that it retains its memory even after power is removed.

Magnetic memory has been around for a long time in the form of cassette tapes and disk drives. But until recently, fast, high-density MRAM was not possible because its read and write access times were inferior to those of semiconductor-based memory. Great strides have since been made in manufacturing thin-film multilayers, which are key to MRAM's operation.

Challenges remain, however. Primary among them are finding the right combination of multilayers to maximize their performance and attaching a microprocessor to the magnetic material to bring memory and processing as close together as possible.

This is what is limiting your gaming performance.

Kind of frightening...

If the right combination of materials can be found, then a whole new generation of computing machines will emerge, with much better performance and nearly instantaneous conversion of instructions in memory to program execution. Will your brain be able to handle this?
