We will spend a bit of time on this issue now because it's usually left out of the larger-scale discussion, but it is quite relevant. In fact, the anecdote presented in the text is apropos.

At issue here is the time efficiency of the scientist, and there are two extremes, each of which can be both good and bad.

- The NSF National Supercomputing Thing (no longer exists). The idea was that individual researchers would not have adequate access to high performance computing (HPC) on their campus, so the NSF decided to create about 10 facilities across the country (e.g. Pittsburgh, UCSD). Then the queuing system got in the way; now it's feasible for individual universities to own HPC, so in the end this was a good transition.

- Universities don't have the funds, in general, to invest in professional facilities. So what happens is that some "superstar" hire gets a large startup package to buy a unique piece of equipment that they keep behind closed doors but use only 10% of the time. (There should be a policy about this - tell UM story.)

On the other hand,

CAMCOR, a very good professional facility, was largely due to a private donation. But has the existence of this shared facility led to a catalytic research process within the UO, or has it more enabled collaborations with outside researchers?

So one issue is how to design a research infrastructure that can better lead to collaboration and exploration of new problems. Although not intended to achieve this goal, the infrastructure below did, in fact, achieve it (via the mechanism of night lunch).

The book (plus GDB) raises a number of interesting policy questions regarding the establishment of scientific infrastructure and where the responsibility lies. To date, all of the following issues remain unresolved:

- How should federal agencies cooperate to provide scientific infrastructure at the national level?

- Who should pay for ongoing costs to support the infrastructure (this is mostly in the form of salaries for professional support staff)?

- If you're a scientist using a national facility, should you have to pay for travel and costs associated with the analysis of data acquired at the facility?

- What is the balance between individual Universities, regional facilities and national facilities? (The proposed but never implemented telescope network, supercomputing as an example of regional facilities).

- Should each federal agency have a set percentage of its annual budget directed towards infrastructure issues? What if that set amount squeezes out PI space? Should this percentage be the same across all agencies?

- How to deal with multiple universities using a shared facility? How are support costs determined and how is support implemented? Some of this seems to be cultural: Kitt Peak support (Americans) vs CTIO support (Chileans).

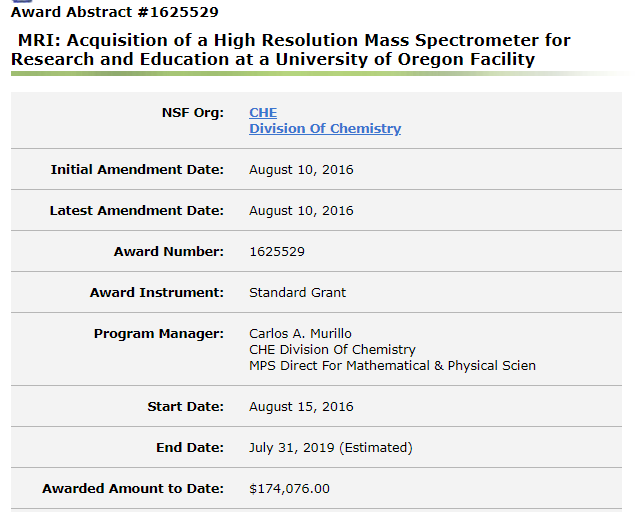

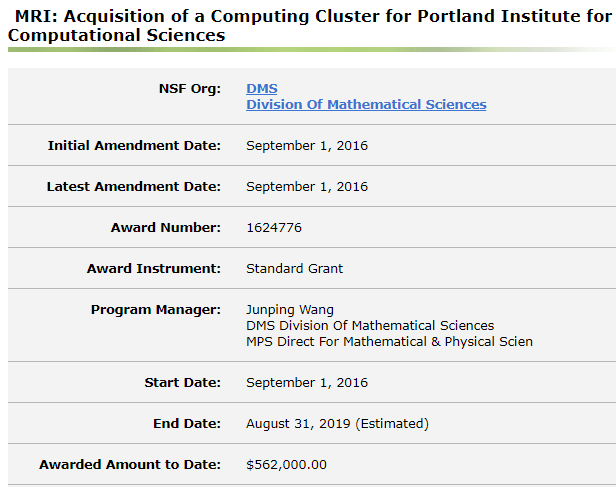

While the NSF does have the MRI (Major Research Instrumentation; it has since morphed to a new acronym) program, it is really unclear how successful that program has been, particularly in the case of an MRI facility being a regional catalyst to stimulate collaborative research. Oftentimes, MRI awards are small (really too small for this kind of program) and go to specialized equipment. For example,

ACISS; first generation HPC at UO

Overall, it is unclear how successful the MRI approach has really been. The NIST facility is perhaps the best model out there - centrally located in the US and full of advanced instruments. But NIST is part of the Department of Commerce!

An increasing problem, however, with our highly distributed research infrastructure situation is legacy costs. This has been known to be a problem for the last 25 years:

In fact, we have mostly ignored this .... and this produced an important document (the RAGS report), whose points were mostly not appreciated, let alone followed:

Some relevant history which is now starting to repeat itself.
